These pictures were taken at St. Peter’s Square. We are not sure of the circumstances under which the two pictures were taken, so the comparison may not be entirely fair. However, one thing they clearly show is that the number of connected devices has increased massively over the past decade. Now, with the tremendous growth in the number of IoT devices, the demand for wireless connectivity is going to be enormous.

If a wireless infrastructure provider wants to perfect its wireless network, it is no longer sufficient to optimize for a small number of users. Designs should be validated in High Density environments.

Let’s say the functionality of a wireless network or device has been perfected and proven in low density and medium density environments. Can we then assume that its performance will degrade along a similar trend in a High Density environment too?

For example, consider a video performance test in which 75 users simultaneously stream a 2000 Kbps video. Everyone gets a good video experience, and all are happy. Can we then say anything about the experience of 100 users simultaneously streaming a 1500 Kbps video? Note that the total raw throughput required is the same (75 × 2000 Kbps = 100 × 1500 Kbps = 150 Mbps). Or, if the uplink throughput with 5 clients is 200 Mbps, how much will it be with 30 clients?

The answer is that we cannot predict it. The above examples come from tests we ran with real devices in our lab. For video, the pass rate dropped from 100% to 30%, and iperf throughput under a 100-client load dropped from 200 Mbps to 65 Mbps.

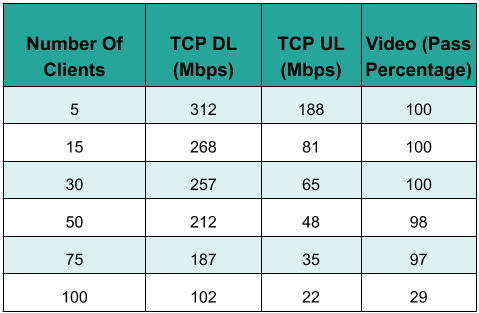

We wanted to find out what affects performance when client density increases. We carried out an extensive study in which we tested the performance of a wireless network at various client loads: TCP DL, TCP UL and video streaming performance at 5, 15, 30, 50, 75 and 100 clients. The clients were a mix of 802.11n and 802.11ac devices, from both Intel and Qualcomm.

For all the tests, the total throughput expected of the AP was the same. For example, where we used a 10,000 Kbps video for 15 clients, we used a 1500 Kbps video for 100 clients.
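The load-matching rule amounts to dividing a fixed aggregate by the client count. This minimal sketch assumes the aggregate video load stayed at 150 Mbps for every client count, which is what the examples in this post work out to (15 × 10,000 Kbps, 100 × 1500 Kbps and 75 × 2000 Kbps); the bitrates it derives for 5, 30 and 50 clients follow from that assumption and are not taken from our test logs.

```python
# Sketch: per-client video bitrate that keeps the AP's aggregate load constant.
# ASSUMPTION: a 150,000 Kbps (150 Mbps) aggregate, inferred from the examples
# in the text (15 x 10,000 Kbps = 100 x 1500 Kbps = 75 x 2000 Kbps).
AGGREGATE_KBPS = 150_000
CLIENT_LOADS = [5, 15, 30, 50, 75, 100]  # client counts used in the study


def per_client_bitrate_kbps(num_clients: int, aggregate_kbps: int = AGGREGATE_KBPS) -> float:
    """Split a fixed aggregate throughput evenly across all clients."""
    if num_clients <= 0:
        raise ValueError("num_clients must be positive")
    return aggregate_kbps / num_clients


for n in CLIENT_LOADS:
    print(f"{n:>3} clients -> {per_client_bitrate_kbps(n):>8.0f} Kbps each")
```

For 15 clients this gives 10,000 Kbps per client and for 100 clients 1500 Kbps, matching the two data points above.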

The performance trend while scaling was as follows:

TCP DL throughput declined almost steadily, from 300 Mbps to 100 Mbps. UL throughput fell sharply from 5 to 30 clients and degraded more gradually after that. Video streaming was fine up to a 75-client load, but its pass rate fell from 100% to 30% when going from 75 to 100 clients.

These tests make it clear that performance does not degrade linearly with the number of clients. Hence, the only way to verify that a network performs well in real-world scenarios is to measure it in a High Density environment.
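To see why low-density results cannot simply be extrapolated, here is a toy calculation on the video pass rates. It assumes the pass rate was 100% at every load up to 75 clients (the results above only say video was "fine" up to that point), fits a straight line through those points with ordinary least squares, and compares the prediction at 100 clients with the measured 30%.

```python
# Sketch: a linear fit on low-density results vs. the measured high-density result.
# ASSUMPTION: "fine" up to 75 clients is read as a 100% video pass rate.
low_density = {5: 100.0, 15: 100.0, 30: 100.0, 50: 100.0, 75: 100.0}  # clients -> pass rate (%)
MEASURED_AT_100 = 30.0  # measured video pass rate (%) at 100 clients


def fit_line(points: dict) -> tuple:
    """Ordinary least-squares fit of y = slope * x + intercept."""
    xs, ys = list(points), list(points.values())
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


slope, intercept = fit_line(low_density)
predicted = slope * 100 + intercept  # extrapolate to 100 clients
error = predicted - MEASURED_AT_100
print(f"predicted {predicted:.0f}%, measured {MEASURED_AT_100:.0f}%, off by {error:.0f} points")
```

The fit predicts 100% at 100 clients and misses the measured result by 70 percentage points: nothing in the low-density data hints at the cliff.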

Next, we wanted to find out what it is about scaling that affects performance so drastically. To do that, we also captured WiFi packets for each test using Airtool on a Mac (FYI, a Mac gives awesome packet capture performance!). For each test we measured:

- Average Packet Size

- Number of Management Frames

- Number of Control Frames

- Number of WiFi Retries
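The four metrics can be computed from any capture once it has been exported to per-frame fields. This sketch assumes an export made with tshark, for example `tshark -r capture.pcap -T fields -E separator=/t -e frame.len -e wlan.fc.type -e wlan.fc.retry > frames.tsv` (we captured with Airtool; the export command and file names are illustrative). In the 802.11 frame control field, type 0 is management, 1 is control and 2 is data. Which frames count towards "Average Packet Size" is our assumption here: the mean length of data frames. Note that `frame.len` includes any radiotap header present in the capture.

```python
from dataclasses import dataclass

# 802.11 frame-control "type" values.
MGMT, CTRL, DATA = 0, 1, 2


@dataclass
class CaptureMetrics:
    avg_data_frame_bytes: float  # ASSUMPTION: "Average Packet Size" = mean data-frame length
    management_frames: int
    control_frames: int
    retries: int


def compute_metrics(export_text: str) -> CaptureMetrics:
    """Parse tab-separated 'frame.len, wlan.fc.type, wlan.fc.retry' rows."""
    mgmt = ctrl = retries = data_frames = data_bytes = 0
    for line in export_text.splitlines():
        fields = line.split("\t")
        if len(fields) < 3 or not fields[1].strip():
            continue  # skip blank lines and frames without an 802.11 header
        frame_len = int(fields[0])
        fc_type = int(fields[1], 0)  # base 0 accepts both "2" and "0x02"
        # Accept both 1/0 and True/False spellings of the retry flag.
        if fields[2].strip().lower() in ("1", "true"):
            retries += 1
        if fc_type == MGMT:
            mgmt += 1
        elif fc_type == CTRL:
            ctrl += 1
        elif fc_type == DATA:
            data_frames += 1
            data_bytes += frame_len
    avg = data_bytes / data_frames if data_frames else 0.0
    return CaptureMetrics(avg, mgmt, ctrl, retries)
```

Running `compute_metrics(open("frames.tsv").read())` once per test run gives one row of the comparison charts below.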

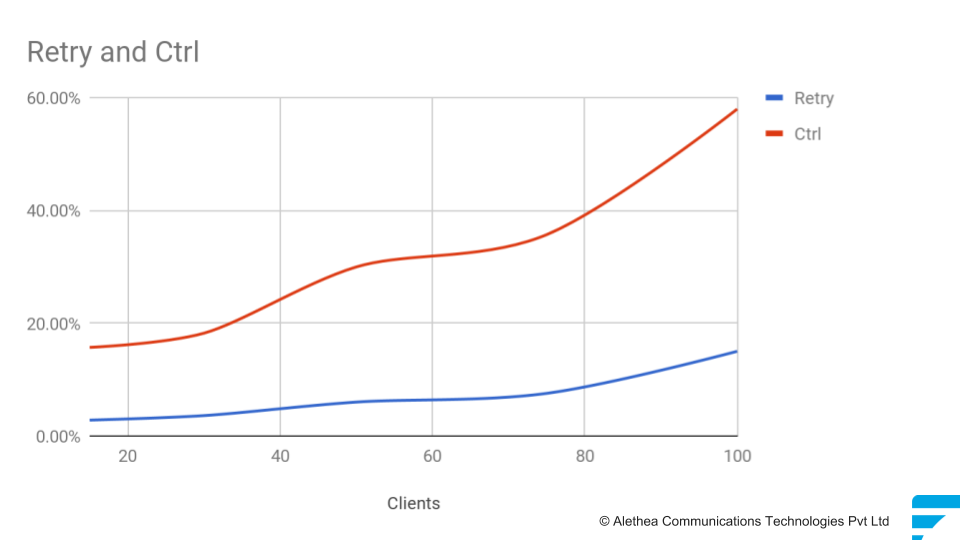

For uplink TCP throughput, control frames and WiFi retries increased significantly. Management frames increased slightly, but not significantly.

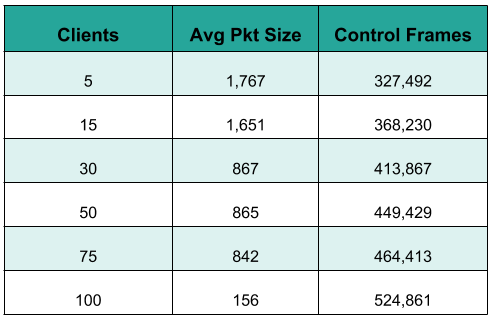

For downlink TCP throughput, WiFi retries did not increase much. The number of control frames increased steadily, but this was minor compared with the drop in Average Packet Size, which fell drastically and degraded in proportion to throughput.
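One way to see why a shrinking Average Packet Size drags throughput down is a simple airtime model: every transmission pays a roughly fixed cost (backoff, preamble, inter-frame spaces, acknowledgement) regardless of how much payload it carries, so throughput ≈ R · t_payload / (t_payload + t_overhead). The sketch assumes a 400 Mbps PHY rate and a flat 100 µs overhead per transmission; both numbers are illustrative, not measured in our tests, and 64,000 bytes stands in for a large aggregated burst.

```python
# Toy airtime model: goodput = PHY rate x fraction of airtime spent on payload.
# ASSUMPTIONS (illustrative only): the PHY rate and a lumped per-transmission overhead.
PHY_RATE_MBPS = 400.0
OVERHEAD_US = 100.0  # backoff + preamble + inter-frame spaces + ACK, lumped together


def payload_airtime_us(size_bytes: float, rate_mbps: float = PHY_RATE_MBPS) -> float:
    """Time on air for the payload: bits / (Mbit/s) = microseconds."""
    return size_bytes * 8 / rate_mbps


def effective_throughput_mbps(size_bytes: float,
                              rate_mbps: float = PHY_RATE_MBPS,
                              overhead_us: float = OVERHEAD_US) -> float:
    """PHY rate scaled by the fraction of airtime that carries payload."""
    payload_us = payload_airtime_us(size_bytes, rate_mbps)
    return rate_mbps * payload_us / (payload_us + overhead_us)


# A large aggregated burst versus progressively smaller transmissions.
for size in (64_000, 16_000, 4_000, 1_500):
    print(f"{size:>6} bytes/transmission -> {effective_throughput_mbps(size):6.1f} Mbps")
```

Under these assumptions, throughput falls from about 371 Mbps with 64,000-byte transmissions to about 92 Mbps with 1500-byte ones, even though the PHY rate never changes.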

For video streaming performance, Average Packet Size was again the most important factor. Control frames increased, but the change was minor compared with the change in Average Packet Size.

On further root cause analysis, we found that the increasing number of control packets and retries in the uplink was due to uncoordinated uplink transmissions from many users. Since the WiFi protocol is based on contention resolution, this is to be expected: the more clients contend for the channel at once, the more often their transmissions collide and have to be retried. Also, there is no way to manage client aggressiveness in the uplink.
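The effect of contention can be illustrated with a simplified model of WiFi's random backoff: in each contention round, every backlogged client picks a backoff slot uniformly from 0 to W-1, and the round ends in a collision if two or more clients pick the smallest slot. The sketch uses W = 16 (the 802.11 OFDM minimum contention window of 15, plus slot 0) and ignores exponential backoff, capture effects and hidden nodes, so its numbers are indicative only.

```python
# Simplified contention model (ASSUMPTIONS: fixed window, all clients always backlogged,
# no exponential backoff, no capture effect, no hidden nodes).
W = 16  # backoff slots 0..15
CLIENT_LOADS = [5, 15, 30, 50, 75, 100]


def collision_probability(n_clients: int, window: int = W) -> float:
    """Probability that the smallest backoff slot is chosen by more than one client."""
    if n_clients < 1:
        raise ValueError("need at least one client")
    # P(exactly one client picks slot k and all others pick a later slot), summed over k.
    p_unique_min = sum(
        n_clients / window * ((window - 1 - k) / window) ** (n_clients - 1)
        for k in range(window)
    )
    return 1.0 - p_unique_min


for n in CLIENT_LOADS:
    print(f"{n:>3} clients -> collision probability per round {collision_probability(n):.2f}")
```

With two clients the collision probability is exactly 1/16; it climbs steeply as the client count grows, which is consistent with the rise in uplink retries and control frames.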

Similarly, in downlink-dominated traffic, fairness requires the access point to send less data to each client at a time so that it can serve all clients. Airtime Fairness (ATF), for example, reinforces this behaviour.
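Airtime fairness can be sketched as a deficit round robin scheduler that budgets airtime rather than bytes. This is a generic illustration, not how any particular vendor implements ATF; the client names, PHY rates and round length below are hypothetical. Because each scheduling round's airtime is split among all backlogged clients, every client's share per round shrinks as more clients join, and a slow client receives the same airtime as a fast one but fewer bytes.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Client:
    name: str
    phy_rate_mbps: float
    queue: deque = field(default_factory=deque)  # pending frame sizes in bytes
    deficit_us: float = 0.0   # unused airtime credit carried between rounds
    airtime_us: float = 0.0   # total airtime consumed so far
    bytes_sent: int = 0


def frame_airtime_us(size_bytes: int, rate_mbps: float) -> float:
    return size_bytes * 8 / rate_mbps


def atf_round(clients, round_us: float) -> None:
    """Share one round's airtime equally among backlogged clients (deficit round robin)."""
    active = [c for c in clients if c.queue]
    if not active:
        return
    quantum = round_us / len(active)  # shrinks as more clients are backlogged
    for c in active:
        c.deficit_us += quantum
        while c.queue:
            cost = frame_airtime_us(c.queue[0], c.phy_rate_mbps)
            if cost > c.deficit_us:
                break  # not enough airtime credit left this round
            size = c.queue.popleft()
            c.deficit_us -= cost
            c.airtime_us += cost
            c.bytes_sent += size
        if not c.queue:
            c.deficit_us = 0.0  # standard DRR: an idle client keeps no credit


slow = Client("slow", 100.0, deque([1500] * 1000))
fast = Client("fast", 400.0, deque([1500] * 1000))
for _ in range(5):
    atf_round([slow, fast], 2400.0)
print(slow.airtime_us, fast.airtime_us, slow.bytes_sent, fast.bytes_sent)
```

After five rounds both clients have used the same airtime, while the 400 Mbps client has moved four times as many bytes as the 100 Mbps one.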

Advances in the radio and physical layers can certainly mitigate these issues. However, there are also simple solutions at higher layers. In the uplink, for example, managing TCP ACKs can help: an algorithm that selectively drops TCP ACKs can rein in the uplink aggressiveness of clients.
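The ACK-dropping algorithm itself is not described here. One well-known form of the idea is ACK thinning (also called ACK filtering): because TCP acknowledgements are cumulative, a newer pure ACK for a flow makes older pure ACKs of that flow still waiting in the same queue redundant, so those can be dropped. The sketch below is a simplified illustration of that technique, not our implementation; it assumes a single transmit queue and pure ACKs without SACK blocks or window updates, the packet fields are hypothetical, and sequence-number wraparound is ignored.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    flow: str       # hypothetical flow identifier (e.g. a 5-tuple rendered as text)
    ack_no: int     # cumulative TCP acknowledgement number
    pure_ack: bool  # True if the segment carries no payload


class AckThinningQueue:
    """Transmit queue that drops pure ACKs superseded by a newer ACK of the same flow."""

    def __init__(self) -> None:
        self.packets: list = []
        self.dropped = 0

    def enqueue(self, pkt: Packet) -> None:
        if pkt.pure_ack:
            # Older queued pure ACKs of this flow carry no extra information.
            kept = [
                p for p in self.packets
                if not (p.pure_ack and p.flow == pkt.flow and p.ack_no <= pkt.ack_no)
            ]
            self.dropped += len(self.packets) - len(kept)
            self.packets = kept
        self.packets.append(pkt)

    def dequeue(self):
        """Return the next packet to transmit, or None if the queue is empty."""
        return self.packets.pop(0) if self.packets else None
```

Data segments are never dropped; only pure ACKs that a later ACK of the same flow has made redundant.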

Similarly, in the downlink, an effective QoS-based scheduler that uses recent traffic trends to decide how to serve each client can make a big difference.
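The scheduler is only outlined above. As one illustration of a scheduler that uses near-past trends, the sketch below swaps in a proportional-fair rule, a well-known technique from cellular networks, rather than any scheduler we built: it keeps an exponentially weighted moving average (EWMA) of each client's recently served throughput and, in each slot, serves the client with the highest ratio of achievable rate to that average. The client names, rates and smoothing factor are hypothetical.

```python
ALPHA = 0.1  # EWMA smoothing factor (hypothetical)


def pf_schedule(rates_mbps: dict, slots: int, alpha: float = ALPHA) -> dict:
    """Return how many slots each client was served under a proportional-fair rule."""
    avg = {c: 1.0 for c in rates_mbps}  # EWMA of served throughput; 1.0 avoids division by zero
    served = {c: 0 for c in rates_mbps}
    for _ in range(slots):
        # Favour clients whose achievable rate is high relative to what they recently got.
        chosen = max(rates_mbps, key=lambda c: rates_mbps[c] / avg[c])
        served[chosen] += 1
        for c in rates_mbps:
            got = rates_mbps[c] if c == chosen else 0.0
            avg[c] = (1 - alpha) * avg[c] + alpha * got
    return served


served = pf_schedule({"near": 400.0, "far": 100.0}, slots=1000)
print(served)
```

With two always-backlogged clients at fixed rates, the rule settles into giving them roughly equal numbers of slots: a client that has been served a lot sees its average rise and yields to the other.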

In the end, however, the most important thing is to test the network in high density environments. Ideally, this testing should be done with real clients, measuring the performance of real applications: browsing, video streaming, throughput, Voice over IP, multicast streaming and any other application that really matters to the target user segment. However, this is time-consuming and involves a lot of effort. Emulated clients offer a good compromise for the majority of test requirements.

Alethea can assist you with testing using both real and emulated clients. Please reach us at info@alethea.in to discuss your specific requirements.